[AI] Ollama: download & run new LLM models + Open WebUI + public access with ngrok + DeepSeek

date

Feb 29, 2024

slug

ai-ollama-llm-models

status

Published

summary

AGI skills

tags

ai

type

Post

Summary

Installation

brew install ollama

# https://github.com/ollama/ollama?tab=readme-ov-file

# service at localhost:11434

# to listen on 0.0.0.0 so other machines can reach the service

launchctl setenv OLLAMA_HOST "0.0.0.0"

launchctl setenv OLLAMA_ORIGINS "*"

# brew services restart ollama

brew services start ollama

ollama serve # not needed if you run the GUI app (downloaded from ollama.com)

# in another shell

# https://ollama.com/library

ollama run llama3

ollama run gemma:7b

ollama run mistral

ollama run mixtral

ollama run llama3.2:1b

# https://ollama.com/library/deepseek-r1:14b

ollama run deepseek-r1:14b

#

ollama run deepseek-r1:32b

# 64GB mem

ollama run deepseek-r1:70b

# remove

ollama list

ollama rm deepseek-r1:70b
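The memory notes beside the larger models above (for example 64GB for the 70b tag) can be sanity-checked with a rule of thumb: weight size in GB is roughly the parameter count in billions times the bits per weight, divided by 8. A minimal sketch, assuming roughly 4-bit quantized weights (an assumption about the tag you pull); `estimate_weights_gb` is a hypothetical helper name, and the result covers weights only, so context cache and runtime overhead come on top.

```shell
# Rough size of the model weights in GB.
#   $1 = parameters in billions (e.g. 70 for deepseek-r1:70b)
#   $2 = bits per weight (assumed default: 4)
estimate_weights_gb() {
  awk -v params="$1" -v bits="${2:-4}" \
    'BEGIN { printf "%.1f\n", params * bits / 8 }'
}
```

For example, `estimate_weights_gb 70` prints `35.0` and `estimate_weights_gb 32` prints `16.0`, which leaves headroom within the 64GB and ~32GB figures noted in this post.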

curl http://localhost:11434/api/chat -d '{

"model": "mistral",

"messages": [

{ "role": "user", "content": "why is the sky blue?" }

]

}'
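By default `/api/chat` streams the reply as a sequence of JSON objects, one per line. Adding `"stream": false` to the request body returns a single JSON object instead, which is easier to handle in scripts. A minimal sketch: `chat_payload`, `ollama_chat` and the `OLLAMA_URL` variable are hypothetical names for illustration, and the payload builder does no JSON escaping.

```shell
# Build a non-streaming /api/chat request body.
#   $1 = model name, $2 = user prompt
# Caveat: both values are inserted verbatim, so they must not contain
# double quotes or backslashes (this sketch does no JSON escaping).
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}' "$1" "$2"
}

# Send the request; the base URL defaults to the local server.
ollama_chat() {
  curl -s "${OLLAMA_URL:-http://localhost:11434}/api/chat" \
    -d "$(chat_payload "$1" "$2")"
}
```

Calling `ollama_chat mistral "why is the sky blue?"` should print one JSON object whose `message.content` field holds the reply.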

Customize a prompt

Models from the Ollama library can be customized with a prompt. For example, to customize the llama2 model:

ollama pull llama2

Create a Modelfile:

FROM llama2

# set the temperature to 1 [higher is more creative, lower is more coherent]

PARAMETER temperature 1

# set the system message

SYSTEM """

You are Mario from Super Mario Bros. Answer as Mario, the assistant, only.

"""

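The Modelfile above can also be written from the shell with a heredoc, which keeps the whole setup scriptable. A minimal sketch; `write_mario_modelfile` is a hypothetical helper name, and the target directory argument is an assumption for illustration.

```shell
# Write the Modelfile shown above into a target directory (default: current dir).
write_mario_modelfile() {
  target_dir="${1:-.}"
  # The quoted 'EOF' keeps the shell from expanding anything inside the heredoc.
  cat > "$target_dir/Modelfile" <<'EOF'
FROM llama2

# set the temperature to 1 [higher is more creative, lower is more coherent]
PARAMETER temperature 1

# set the system message
SYSTEM """
You are Mario from Super Mario Bros. Answer as Mario, the assistant, only.
"""
EOF
}
```

Running `write_mario_modelfile .` leaves a `./Modelfile` in place for the `ollama create` step.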
Next, create and run the model:

ollama create mario -f ./Modelfile

ollama run mario

>>> hi

Hello! It's your friend Mario.

Use Docker

cd ~/_my/cloudsync/sh88qh_h1/SynologyDrive/__qh_tmp/data/ollama

mkdir ollama

cat > docker-compose.yml <<EOF
services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434"
      #- "40434:11434" # for kxultra x.x.0.4
    volumes:
      - ./ollama:/root/.ollama
    restart: always

networks:
  default:
    name: npm_nginx_proxy_manager-network
    external: true
EOF

# start the container before running the docker exec commands

docker compose up -d

docker exec -it ollama ollama run llama3.2:1b

docker exec -it ollama ollama run llama3.3

docker exec -it ollama ollama run mistral

# ~32GB mem

docker exec -it ollama ollama run deepseek-r1:32b

# or just pull in the background

docker exec -d ollama ollama pull deepseek-r1:14b

# for RAG

docker exec -it ollama ollama pull nomic-embed-text
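The `nomic-embed-text` model pulled for RAG is used through the embeddings endpoint rather than chat. A minimal sketch of requesting an embedding over HTTP, assuming port 11434 is published as in the compose file; `embed_payload`, `ollama_embed` and the `OLLAMA_URL` variable are hypothetical names, and the input text is inserted without JSON escaping.

```shell
# Build an /api/embed request body.
#   $1 = embedding model, $2 = text to embed
# Caveat: no JSON escaping, so avoid double quotes and backslashes in the text.
embed_payload() {
  printf '{"model":"%s","input":"%s"}' "$1" "$2"
}

# Request the embedding; the reply carries the vectors in an "embeddings" array.
ollama_embed() {
  curl -s "${OLLAMA_URL:-http://localhost:11434}/api/embed" \
    -d "$(embed_payload "$1" "$2")"
}
```

For example, `ollama_embed nomic-embed-text "why is the sky blue?"` returns the vector that a RAG pipeline would store alongside the text.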

GUI - Ollamac

brew install --cask ollamac

GUI - chatbot-ollama

docker run -p 3000:3000 ghcr.io/ivanfioravanti/chatbot-ollama:main

# in browser, go to

http://127.0.0.1:3000

GUI

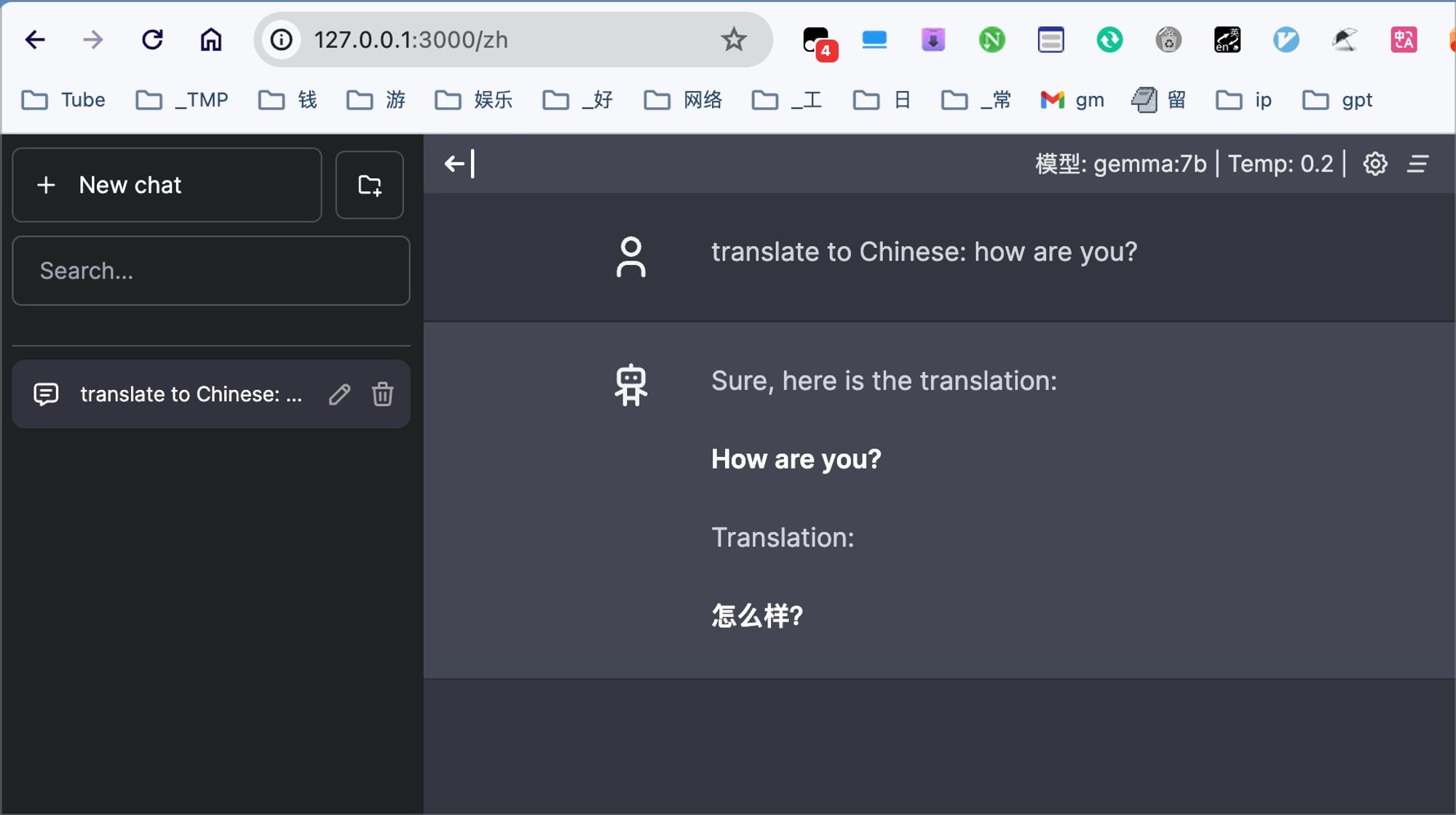

241214 Open WebUI

cd ~/_my/cloudsync/sh88qh_h1/SynologyDrive/__qh_tmp/data/open-webui

mkdir open-webui

# separate & independent webui

# if Ollama runs on your computer as a native app, use this compose file

cat > docker-compose.yml <<EOF
services:
  open-webui:
    # the main tag ships only the UI
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    restart: always
    ports:
      - "3000:8080"
    extra_hosts:
      - "host.docker.internal:host-gateway"
    # if Ollama runs on a different host
    #environment:
    #  - OLLAMA_BASE_URL=https://example.com
    #  - OLLAMA_BASE_URL=http://ollama:11434
    volumes:
      - ./open-webui:/app/backend/data

networks:
  default:
    name: npm_nginx_proxy_manager-network
    external: true
EOF

docker compose up -d

# bundled 2-in-1 image: runs both Open WebUI and Ollama in a single container

mkdir ollama

cat > docker-compose.yml <<EOF
services:
  open-webui:
    # the ollama tag bundles both Open WebUI and Ollama
    image: ghcr.io/open-webui/open-webui:ollama
    container_name: open-webui
    restart: always
    ports:
      - "3000:8080"
    volumes:
      - ./ollama:/root/.ollama
      - ./open-webui:/app/backend/data

networks:
  default:
    name: npm_nginx_proxy_manager-network
    external: true
EOF

docker compose up -d
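Once the container from either compose file has been started (for example with `docker compose up -d`), Open WebUI may take a moment before it answers on the published port 3000. A minimal sketch of a polling helper; `wait_for_http` is a hypothetical name, and the default of 30 attempts at 2-second intervals is an arbitrary assumption.

```shell
# Poll a URL until it answers successfully or the attempt budget runs out.
#   $1 = URL, $2 = max attempts (default 30), $3 = delay in seconds (default 2)
wait_for_http() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -f makes curl fail on HTTP errors; output is discarded, only the status matters
    if curl -fsS --max-time 2 -o /dev/null "$url" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

For example, `wait_for_http http://127.0.0.1:3000 && echo "Open WebUI is up"` blocks until the UI responds or gives up after about a minute.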

public access

ngrok http 11434 --host-header="localhost:11434"
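While the tunnel is running, the ngrok agent exposes a local inspection API (by default on 127.0.0.1:4040) that reports the assigned public URL. A minimal sketch of reading it from a script without extra tools; `first_public_url` and `ngrok_public_url` are hypothetical helper names, and the text-based extraction assumes the `public_url` value contains no escaped quotes.

```shell
# Extract the first "public_url" value from ngrok's /api/tunnels JSON on stdin.
first_public_url() {
  grep -o '"public_url": *"[^"]*"' | head -n 1 | cut -d '"' -f 4
}

# Ask the local ngrok agent for its tunnels and print the first public URL.
ngrok_public_url() {
  curl -s http://127.0.0.1:4040/api/tunnels | first_public_url
}
```

With the tunnel up, `curl "$(ngrok_public_url)/api/tags"` should list the installed models through the public address, which confirms the host-header rewrite is working.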